What is Edge Computing?

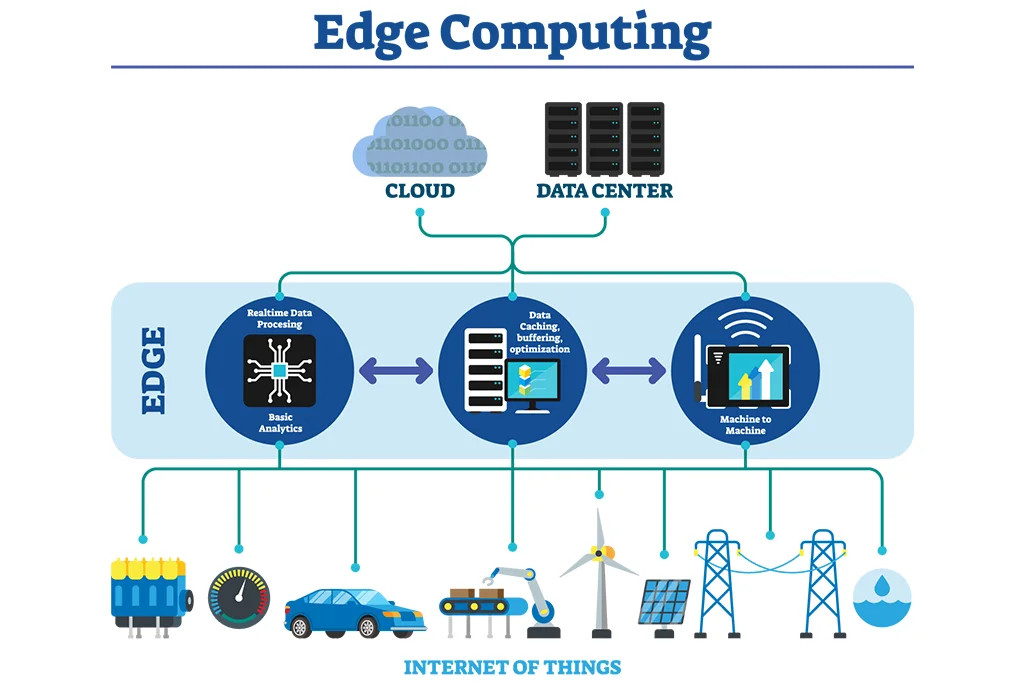

Edge computing is an approach that processes data close to where it is generated, at the "edge" of the network, rather than sending everything to distant cloud servers. Keeping computation local can reduce latency, save bandwidth, and improve reliability, which makes this decentralized approach well suited to real-time applications.

Key Benefits of Edge Computing

Reduced Latency

By processing data locally, edge computing avoids a round trip to a distant data center. This cuts response times enough to support real-time decisions in critical applications such as autonomous vehicles and industrial automation.

Optimized Bandwidth

Because raw data can be filtered or summarized on the device, less of it needs to travel to centralized servers. This reduces bandwidth usage and network congestion.

Improved Reliability

Edge devices can keep processing data during intermittent internet connectivity, because they do not depend on a centralized system for every decision.

Enhanced Security & Privacy

Processing sensitive data locally means less of it is transmitted or stored centrally, which reduces its exposure to security risks. This helps protect privacy, particularly in industries with strict compliance requirements.

Cost Efficiency

By offloading processing to edge devices, organizations can cut the cloud storage and bandwidth costs of managing large volumes of data.
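Several of these benefits, namely bandwidth, reliability, and cost, often come from the same two techniques on the edge device. The first is aggregating raw readings into compact summaries. The second is buffering those summaries locally (store-and-forward) while the uplink is unavailable.

The sketch below is a minimal in-memory illustration. It assumes a single numeric sensor stream, fixed-size windows, and a min/max/mean summary. The `EdgeNode` class and the `send` callback are hypothetical: `send` stands in for whatever transport a real deployment would use.

```python
import json
import statistics
from collections import deque
from typing import Callable


class EdgeNode:
    """Hypothetical edge node: summarize readings locally, queue summaries until the uplink accepts them."""

    def __init__(self, send: Callable[[str], bool], window_size: int = 60, max_pending: int = 1000):
        self.send = send                            # assumed uplink: returns True on successful delivery
        self.window_size = window_size
        self.window: list[float] = []
        self.pending = deque(maxlen=max_pending)    # oldest summaries are dropped if the queue fills

    def ingest(self, reading: float) -> None:
        """Add one raw reading; emit a summary each time a window fills."""
        self.window.append(reading)
        if len(self.window) == self.window_size:
            summary = {
                "count": len(self.window),
                "min": min(self.window),
                "max": max(self.window),
                "mean": statistics.fmean(self.window),
            }
            self.pending.append(json.dumps(summary))
            self.window = []
            self.flush()

    def flush(self) -> int:
        """Deliver queued summaries in order, stopping at the first failure; return how many were sent."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break  # uplink unavailable: keep the summary for a later retry
            self.pending.popleft()
            sent += 1
        return sent


# Simulated uplink for illustration: starts offline, then comes back.
online = False
delivered: list[dict] = []


def fake_send(payload: str) -> bool:
    if online:
        delivered.append(json.loads(payload))
        return True
    return False


node = EdgeNode(fake_send, window_size=60)
for i in range(180):                      # e.g. three minutes of hypothetical 1 Hz samples
    node.ingest(20.0 + (i % 7) * 0.1)
print(len(node.pending))                  # 3 summaries queued while offline
online = True
node.flush()
print(len(delivered))                     # 3
```

Here 180 raw readings become three small summaries. They are queued while the simulated link is down and delivered in order once it returns.

A real deployment would likely persist the queue to disk so it survives restarts. It would also choose summary statistics based on what the central system actually needs, since detail discarded at the edge cannot be recovered later.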

Common Use Cases

IoT Devices: Smart home devices, wearables, and connected appliances can respond more quickly when they process data locally instead of waiting on a cloud service.

Autonomous Vehicles: Edge computing allows self-driving cars to process data from sensors and cameras in real time, making instant decisions for navigation and safety.

Healthcare: Medical devices can process patient data on-site, delivering faster alerts and diagnostic results while reducing dependence on cloud-based systems.

Industrial Automation: In manufacturing, edge computing helps monitor machinery, perform predictive maintenance, and optimize operations by processing data locally.

Smart Cities: Traffic management, energy grids, and public safety systems use edge computing to analyze data near where it is collected and respond quickly.
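The predictive-maintenance case can be illustrated with a very simple local check that an edge gateway could run next to a machine. The check flags any sensor reading that sits far outside the recent baseline, with no round trip to the cloud.

The sketch below uses a rolling z-score. The class name, window size, 3.0 threshold, and vibration-style readings are illustrative assumptions, not a standard method or real data.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Hypothetical detector: flag readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0):
        self.history = deque(maxlen=window)   # keeps only the most recent readings
        self.z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if value is anomalous versus the current window, then record it."""
        anomalous = False
        if len(self.history) >= 2:            # a sample standard deviation needs at least two points
            mean = statistics.fmean(self.history)
            stdev = statistics.stdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.z_threshold:
                anomalous = True
        self.history.append(value)            # note: anomalies also enter the baseline here
        return anomalous


# Hypothetical vibration readings: a steady repeating pattern followed by one spike.
detector = RollingAnomalyDetector(window=20, z_threshold=3.0)
readings = [1.0 + 0.01 * (i % 5) for i in range(30)] + [2.5]
flags = [detector.update(r) for r in readings]
print(flags.index(True))  # 30, the position of the spike
```

Production systems typically use more robust statistics or trained models. They might also exclude flagged readings from the baseline; this version keeps them to stay short.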